[Français]

Sponsor List

|

MUTE experimental results

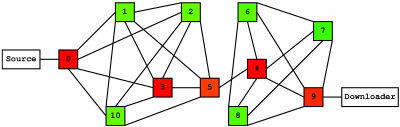

We started 11 MUTE nodes one-by-one and allowed them to discover each other through an additional hostcatcher node. This discovery process is exactly the same as what happens when nodes join a real-world MUTE network. Each node was set to allow a maximum of 5 neighbor connections. After this 11-node network was established, we connected two more nodes to the network: a "source" node that had a test file available for download, and a "downloader" node that would search for and download the test file. We connected these extra nodes to opposite ends of the network. The following map shows the resulting network layout:

We then performed various operations while measuring the message load at each node. In the diagrams below, we use a coloring scheme to represent message loads relative to the node with the maximum load. For example, suppose node 8 had the highest load and processed 12 messages. If node 3 processed only 6 messages, we would say that node 3 had a relative load of 50%, since it processed half as many messages as node 8. We use the following color spectrum to indicate relative loads:

Now we can describe the various operations performed in our experiment. First, the downloader broadcasted a search request for the test file, and the source responded with search results. The following table shows the resulting load:

| Node: | 0 | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 |

| Messages: | 5 | 5 | 5 | 5 | 5 | 2 | 4 | 3 | 4 | 3 | 5 |

These results are easier to visualize on the network map itself with relative-load coloring:

For this search request, the load is spread evenly across the network, which is what we might expect, since the search request is broadcast to all nodes. Though only one request was sent, each node receive several messages, since it got duplicates from its neighbors. Duplicates are dropped without being passed on or processed further, but they still represent message load. The results are routed back to the downloader along a back-path established by the search request, but this represents only a single message, so its impact is drowned out by the larger impact of the broadcast. The variation in load is due to the variation in the number of neighbors that a node has "upstream" when the search request is broadcast. For example, node 5 has only one neighbor upstream, so it only receives one copy of the search request.

Next, the downloader initiated a transfer of the test file from the source. The file is broken up into chunks, and a request-response pair is routed through the network for each chunk. Our test file was 195 KiB in size and broken up into 9 chunks. The following table and map shows the cumulative load after this operation was complete:

| Node: | 0 | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 |

| Messages: | 25 | 5 | 5 | 25 | 25 | 22 | 4 | 3 | 4 | 23 | 5 |

We can see that the majority of traffic for this transfer passed through a direct route between the source and the downloader. In fact, after processing the search request, the nodes that were not on the route (called "bystander" nodes) processed no additional messages. Relative to the maximum cumulative load of 25 messages, the load on these bystanders is negligible, and thus they show up as green on the colored map.

The search request served to "discover" the source in the network, and the source's response served to establish a two-way route through the network. After such a route was established, no additional bystander load occurred. Search requests are always broadcasted and never routed, so they certainly make good exploratory messages for establishing routes. If no routes exist, however, nodes default to broadcasting routable messages. In other words, any MUTE message can serve as an exploratory message.

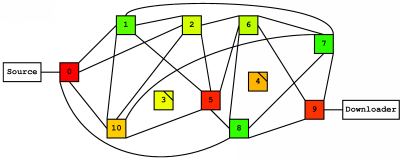

Next, the downloader initiated another transfer of the 9-chunk test file from the source, but this time, we intentionally killed node 3 while the transfer was in progress. The following table and map shows the cumulative load after this operation was complete, along with the new network layout:

| Node: | 0 | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 |

| Messages: | 47 | 8 | 8 | 33 | 45 | 42 | 4 | 3 | 4 | 43 | 19 |

Node 3 saw additional routed message traffic before it was killed. After the route through node 3 broke, nodes 0 and 5 patched the route locally by by passing the remaining file transfer messages through node 10. Thus, we can see that node 10 carried some of the load, though its load is relatively light compared to the cumulative load of other nodes on the route.

Next, we intentionally killed node 4, another key node along the route. After node 4 was down, the downloader initiated another transfer of the 9-chunk test file from the source. The following table and map shows the cumulative load after this operation was complete, along with the new network layout:

| Node: | 0 | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 |

| Messages: | 20 | 12 | 29 | 33 | 45 | 65 | 26 | 6 | 7 | 63 | 42 |

With node 4 removed, the patched route is as follows: [9 - 6 - 2 - 5 - 10]. Note that this is not the best patched route, since node 2 is not needed. Node 6 could route messages directly to node 5. This behavior is a consequence of node 6's local decision making: the route that looks best locally may not be best when viewed globally. The best global route would likely be the new 3-node route, [9 - 8 - 0]. Despite these issues, the route was correct and delivered all messaged passed between the source and the destination.

Fresh routes (for example, the first route discovered between the source and the destination in this experiment) are often close to optimal, but repaired routes can be sub-optimal. A MUTE node has the option of requesting a fresh route for any message that it sends into the network. Messages tagged with a fresh route request cause the appropriate routing tables to be flushed, and the message will be broadcast throughout the network to search for a new route. If a MUTE node detects that a route is overly-repaired and no longer close to optimal, it can request a fresh route to improve routing performance.

The current MUTE file sharing implementation uses response timeouts to detect poorly repaired, overloaded, or completely broken routes. If a file sharing node times out waiting for a response from another node, it resends the message with a fresh route request before giving up.